Model-based Multiple Object Tracking Using Capacitive Proximity Sensors

Published:

From Oct. 2018 to Mar. 2019, I worked with M.Sc. Hosam Alagi and Prof. Dr.-Ing. Bjoern Hein to develop an object tracking method based on capacitive proximity sensors.

Published:

From Apr. 2019 to Aug. 2019, I worked with two other master's students at Intelligent Sensor-Actuator-System (ISAS) to develop a LiDAR-based multiple extended object tracking framework built on an unscented Kalman filter (UKF). The motion and observation models are learned from training examples using Gaussian processes (GP).

Published:

From Nov. 2018 to Dec. 2019, I was a student assistant at the Institute for Anthropomatics and Robotics (IAR) - Intelligent Process Automation (IPR), working with Prof. Dr.-Ing. Torsten Kroeger and M.Sc. Woo-Jeong Baek. We focused on safe human-robot interaction and safety sensor configuration.

Published:

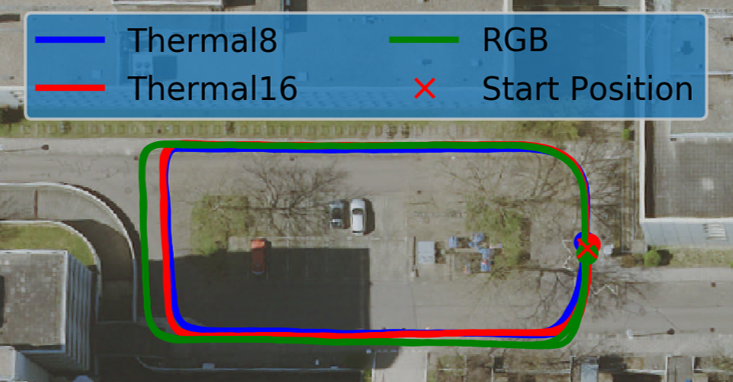

From Nov. 2019 to June 2020, I worked with Prof. Dr.-Ing. Gert F. Trommer and M.Sc. Christopher Doer to develop a thermal-inertial odometry framework that enables stable and robust motion estimation in visually degraded environments, such as dark evenings.

Published:

This repository implements an all-in-one and ready-to-use LiDAR-inertial odometry system for Livox LiDAR.

<img src='/images/project/pjlab_multi_bag.gif' style='zoom:40%;'>

Published in ITG-Fachtagung Sensoren und Messsysteme 2019, 2019

In this contribution, we present two approaches for automatically generating the optimal sensor configuration in a defined human-robot collaboration scenario. The configuration is optimized with respect to compliance with the human operator's safety requirements and minimum floor space consumption in the working cell.

Recommended citation: Baek, W.; Huang, S.; Ledermann, C.; Kröger, T.; Kirsten, R. 2019. 20. GMA/ITG-Fachtagung Sensoren und Messsysteme, Nürnberg, 25.-26.Juni 2019. Proceedings, 93–99, AMA Service GmbH, Wunstorf. https://www.ama-science.org/proceedings/details/3383

Published in IROS 2019 Workshop, 2019

In this work, an object tracking method based on capacitive proximity sensors is presented. Arranged as a vector and installed in the sidewalls of a work table with a robot, the sensors are able to detect proximity events in the near field of the workspace, including a human approaching the table or interacting with the robot.

Published in ArXiv, 2022

In this paper, we provide a literature review of existing multi-modal methods for perception tasks in autonomous driving. We analyze in detail over 50 papers that leverage perception sensors, including LiDAR and camera, to solve object detection and semantic segmentation tasks. Unlike the traditional methodology for categorizing fusion models, we propose a taxonomy based on the fusion stage that divides them into two major classes and four minor classes. We also examine the current fusion methods in depth, focusing on the remaining problems, and open a discussion of potential research opportunities. In conclusion, this paper aims to present a new taxonomy of multi-modal fusion methods for autonomous driving perception tasks and to provoke thought about future fusion-based techniques.
